The Turing Test stipulates that if a machine can convince a human that it is human, then it is human (intelligent).

Does that mean that if a human can convince a machine that they are a machine, they are a machine (artificially intelligent)?

I put that to the test…

(*Don’t worry, I’ll make this relevant to AI in the public sector by the end, however convoluted the route!)

Round 1: Machine vs Human

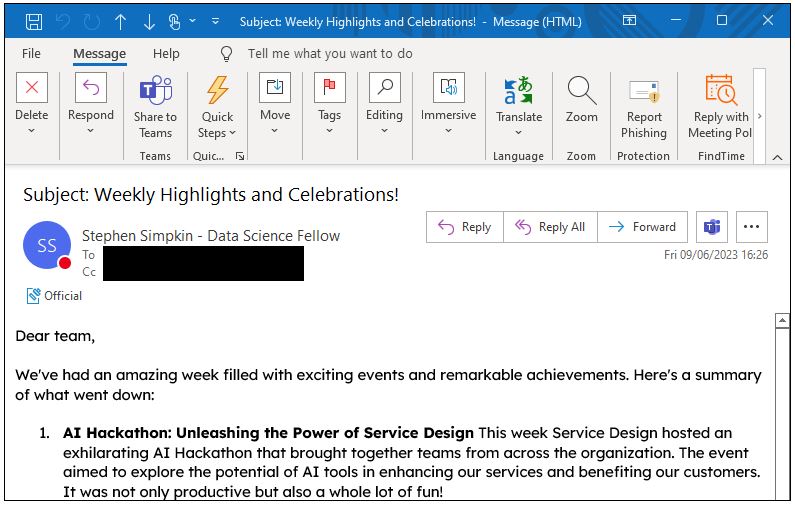

At the end of each week someone within our team writes an ‘end of week note’, a short briefing that summarises the events of the past 7 days. This could be any work that is progressing, or completed, any forums attended and learnings from them, or simply any personal achievements.

At the beginning of June, it was my turn to write the ‘end of week note’. As always, I’d left it until the very last minute. Classic human behaviour. As the week in question was when my colleagues and I attended the excellent ‘AI Hack Day’ (read all about it here), I thought:

“I know, what if I got ChatGPT to write the end of week note!”

I was given all the content that colleagues wanted to include. All I had to do was feed it into ChatGPT and ask it to ‘create an end of week briefing for a data and analytics team that includes the following information…’

And it did!

Did it fool my fellow humans? Absolutely NOT. I think everyone immediately saw I was cutting corners and that it was written by ChatGPT and not myself.

Or was it???

Round 2: Human vs Human(s)

Well, NO. BOOM! I Shyamalan'd everyone.

It was all a ruse. I wrote it myself. As a real human boy. But I tried to replicate the style of ChatGPT outputs. ChatGPT loves bullet-pointed lists. ChatGPT is pretty good at concisely getting to the point (which I am not!). When ChatGPT tries to replicate human emotion it’s quite ‘motivational poster-ey’. Because that is what it has been programmed to do.

So it was pretty easy to replicate its style.

Or was it???

Round 3: Human vs Machine

As a famous puppet fox might say - "BOOM BOOM!" - double Shyamalan'd.

The question I asked at the beginning was whether a human could fool a machine into thinking they are a machine.

So I then asked ChatGPT whether it thought the end of week note was written by a human (me) or generative AI (itself). And no matter how I asked the question it always responded by saying…

“Based on the context provided, it appears that you (a human) wrote the passage”


I tested further by asking the same question about different passages I’d generated in the past that were wholly written by ChatGPT, and it answered correctly every time.

I had failed the reverse Turing Test. Contrary to what family members, colleagues, doctors, numerous school reports, strangers in the street, etc, have said on many occasions, I am actually NOT a machine!

Why does this matter?

Machines/AI are very good at lots of things. The analytics under the bonnet of artificial intelligence products can do things humans cannot – for example, reading and summarising vast quantities of text that would otherwise take a human decades to read.

It can do things that wouldn’t be efficient for a human to do. Falls pendants, which alert agencies when they detect that a vulnerable adult may have had a fall, are an example. What’s the alternative here? Plant a human in the household of every frail adult, quietly sitting in the corner waiting for a fall to happen?

AI can find patterns in data that would otherwise be invisible to the human eye…

…when it is programmed to do so

Which is where humans come in.

Humans are very good at lots of things. The professional behind the bonnet of the artificial intelligence products can do things machines cannot.

Essex County Council has a wealth of skilled professionals who understand the services they are responsible for. Only a human can triangulate the many sources of intelligence that together point to the best possible course of action. Whether determining priorities, unpicking challenges, delivering services, etc, a combination of analytics, research, commissioning intelligence, local knowledge, horizon scanning, lived experience, resident voice, and many other things are all needed. A human is needed to facilitate all of this, and ultimately a human is needed to decide how to act upon it.

Round 4: Humans AND Machines vs the World

This is particularly pertinent in the public sector. Humans (otherwise known as residents) are at the heart of everything county councils do.

Like everyone at the moment, we are looking for appropriate uses of predictive analytics and artificial intelligence. Without a shadow of a doubt there is a big role for it in the public sector, and it is inevitable.

Ethically, legally and practically, there is a space for AI. It won’t be either of the extremes (“Let’s use AI for everything” vs “Let’s banish AI and never speak of it again”); rather, it will be somewhere in the middle.

Somewhere driven by the things humans are good at, and supplemented by the things machines are good at.

(*I’m hoping this latest series of Black Mirror bucks the fear-mongering trend and has an episode where humans and artificial intelligence live side by side harmoniously, and it’s an hour-long show about efficient processes, optimised services, and transformative tech. Fingers crossed...)
