On 26th June, colleagues from Essex County Council, Essex Police, and the University of Essex took ecda to the Catalyst Conference. This event reflected on the work of the Catalyst Project, showcasing the progress of the collaborative initiatives and highlighting the future potential for analytics in local government.

ecda partners came together to facilitate a series of discussions built around the 3 key components of ecda:

Data + People + Action = Essex Centre for Data Analytics

This is the first instalment of the trilogy: ‘DATA’

DATA: By Stephen ‘Data’ Simpkin

A good trilogy only comes to be if the first instalment is a standalone box office smash. There is no way there would have been a Godfather 3 if the first movie ended in a dance-off.

And whilst I’m not saying that ‘data is the most important part of said trilogy’, I will argue that without ‘data’ there is no ecda! Data is the Don Corleone of the trilogy.

A consistent theme throughout the Catalyst Conference was the many benefits of big-data collaboration.

“Organisations working together to tackle a shared issue”

“Partners sharing skills, tools, resource, & best practice, to develop capabilities”

“Generating ideas, innovation, and excitement”

Another recurring theme (and one that many people in this world will recognise) was the difficulty of bringing data together. Not just in the literal sense, but also the governance, cultural, and ethical challenges and considerations.

In small groups we discussed (a) the top 3 challenges that our organisations face – and (b) some potential ways of tackling these.

*I am very conscious that we ran out of time before I had the chance to (c) reflect on some of our experiences and recommendations for overcoming said challenges, so effectively I just released a load of angry bees into a room and walked out without opening any windows! I’ll try and open a few metaphorical windows here!

We all find ourselves in naturally risk-averse organisations. There is fear (and often suspicion) surrounding the mere suggestion that ‘our’ data should ever leave ‘our’ organisation. In an earlier workshop the excellent James Cornford from the University of East Anglia pointed out that “no one ever got sacked for NOT sharing data”. And ‘not’ sharing data is the norm. We not-share data all the time. I’m doing it right now. I just not-shared data again. It’s easy. And it’s never going to lead to a 20 billion trillion gazillion pound fine.

So whilst a huge part of this fear is ingrained behaviour, some will be down to a lack of trust. A lack of trust and understanding in the systems and governance that are supposed to facilitate data sharing. And also perhaps a lack of trust in each other? I think we would all admit that we are a little possessive/protective over our internal data, and our subject-matter expertise in this data. Data collaboration doesn’t undermine this in any way; it gives us an opportunity to share and expand knowledge.

Building relationships and trust amongst partners is critical. A harmonious way of working together can’t be mandated; it has, in part at least, to develop organically. In ecda we have been seeking regular opportunities for analysts across the partnership to work and learn together in a shared environment.

Outside of the above cultural barriers – the ‘data’ itself can be problematic (for want of a better word). The systems we use for capturing information were not necessarily designed with a view that ‘one day we may want to make large bespoke extractions’! They are typically ‘case’ management systems, not ‘data’ management systems. In short, it’s not always easy to retrieve data (in the way you want, covering the period you want). And smaller organisations often don’t possess the data management skillsets for retrieval.

Once that hurdle is overcome, and assuming the data is robust (I don’t have time to delve into data quality/reliability!), the next step is potentially the trickiest and most underestimated element of big-data collaborations. Joining data. This isn’t about the pseudonymisation processes – these are simple, and nice people have already created quick and easy ways of doing this in a safe and secure way. Neither is it about the data prep/cleansing (which in itself has the potential to be an arduous task). It is the physical exercise of ‘joining data’.

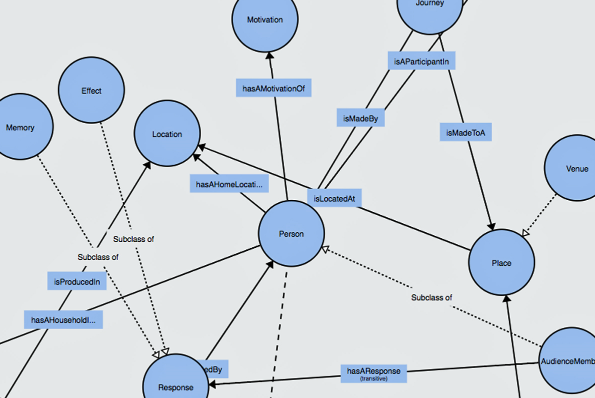

Just because people have agreed to join data doesn’t mean it joins nicely. Data ontology is a hugely important factor – an appreciation of the different types of data is a must. You can’t just throw two or more different datasets into a pot and hope it all ties together nicely! Take my fictitious example of Mr. Paul. E. Mann. He has visited his GP 8 times in the last 4 years for various ailments, one of which resulted in a hospital referral. On top of this he has had 3 hospital admissions, 1 via A&E, and 1 unplanned. He has called 111 five times, and used the ambulance service once. He has 2 live social care domiciliary packages, but these have changed considerably over time (10 different packages). He has moved house once, and changed GP twice in this time. Mr. Paul. E. Mann has several limiting illnesses/conditions, is male, over 75, and lives alone (after his partner, & informal carer, sadly passed away 2 years ago).

Even if there is a common identifier across all these systems (pseudo NHS number) – how do you even begin to collate all these episodes and demographics?!?!? And this is just ONE person!

I wish I had a magic answer! But the truth is that there is no single flat-packed set of rules or guidelines I can give. I guess it largely depends on what action you are hoping to take – which dictates the analysis you wish to execute – which should therefore drive the nature of the ‘join’.

Traditionally, analysts might have seen data as static 2D tables. Data collaboration exercises tend to create ‘datacubes’ – multi-dimensional arrays of inter-connected values. I can see that Paul. E. Mann exists in our social care records, and also in the records of hospital flows. But for my analytical purposes I will need to perform some sort of data aggregation.
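To make ‘some sort of data aggregation’ a bit more concrete, here is a minimal sketch of one possible starting point (certainly not the only one, and not a description of what ecda actually runs). It assumes every extract already carries a shared pseudonymised ID and a date; the field names (`pseudo_id`, `date`), the source names, and the records themselves are all made up for illustration. The idea is simply to stack every system’s records into one long timeline and then count events per person per source.

```python
from collections import Counter, defaultdict
from datetime import date


def stack_events(sources):
    """Flatten {source_name: [records]} into one list of events,
    ordered by person and then by date."""
    events = [
        {"pseudo_id": rec["pseudo_id"], "date": rec["date"], "source": name}
        for name, records in sources.items()
        for rec in records
    ]
    return sorted(events, key=lambda e: (e["pseudo_id"], e["date"]))


def count_by_source(events):
    """Count how many events each person has in each source system."""
    counts = defaultdict(Counter)
    for event in events:
        counts[event["pseudo_id"]][event["source"]] += 1
    return counts


# Hypothetical extracts: the IDs, dates, and source names are invented.
sources = {
    "gp": [
        {"pseudo_id": "P001", "date": date(2018, 3, 2)},
        {"pseudo_id": "P001", "date": date(2019, 1, 15)},
    ],
    "hospital": [{"pseudo_id": "P001", "date": date(2019, 2, 1)}],
    "social_care": [{"pseudo_id": "P002", "date": date(2018, 7, 9)}],
}

timeline = stack_events(sources)
summary = count_by_source(timeline)
```

The long ‘one row per event’ shape is a design choice: it keeps each source’s records intact and leaves the decision about how to aggregate until you know what question you are asking.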

(For example, if I want to see whether it’s possible to predict his (and others’) unplanned hospital admission(s), I may wish to look at all the events across all datasets that occurred in the months leading up to the admission.)
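As a rough illustration of that ‘months leading up to the admission’ idea, the sketch below counts one person’s events from each source inside a look-back window. Everything here is an assumption for the sake of the example: the 90-day window, the function name `events_before_admission`, the field names, and the made-up records. Events on the admission date itself are left out so the admission doesn’t end up ‘predicting’ itself.

```python
from collections import Counter
from datetime import date, timedelta


def events_before_admission(events, admission_date, lookback_days=90):
    """Count events per source in the window
    [admission_date - lookback_days, admission_date)."""
    window_start = admission_date - timedelta(days=lookback_days)
    return Counter(
        event["source"]
        for event in events
        if window_start <= event["date"] < admission_date
    )


# Hypothetical timeline for a single pseudonymised person.
person_events = [
    {"source": "gp", "date": date(2018, 1, 1)},         # too old: outside the window
    {"source": "gp", "date": date(2019, 5, 1)},
    {"source": "nhs111", "date": date(2019, 5, 20)},
    {"source": "ambulance", "date": date(2019, 6, 1)},  # same day as admission: excluded
]

features = events_before_admission(person_events, admission_date=date(2019, 6, 1))
```

Counts like these could then become the inputs to whatever predictive method is chosen; the right window length and which events to include would depend on the action you are hoping to take.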

It’s tricky to even explain let alone carry out! *But also never underestimate people’s willingness to talk about successes they have had in challenging areas! We have learnt a lot from speaking with other public (and private) sector organisations and are happy to talk about our own experiences.

A final challenge identified was the self-perceived lack of skills – and the technical requirements for big data management, analytics, and data science. Our respective teams are incredibly skilled and have been delivering high quality insight for decades. And our county has improved as a result of this insight. The term ‘data science’ only really became a popular turn of phrase in 2012 when the Harvard Business Review called data scientist the ‘sexiest job of the 21st century’ (as ‘Data Science Fellow’ I guess that makes me the Scarlett Johansson/Brad Pitt [delete as appropriate] of local government analytics). So most of the people within our teams didn’t initially know they were signing up for such sexy work!!!

Joking aside – in the last 2-3 years there have been unbelievable changes in the world of analytics. Datasets are bigger, and there is more data than ever. The tools and software for processing data are quicker, easier to use, and more powerful, enabling complex methods to become more mainstream. And most importantly – the perceived value of data and analytics has gone through the roof (the data revolution is here, people)!

But the ‘mainstreaming’ of methods popular in machine learning (such as logistic regression) doesn’t in any way diminish the core problem-solving skills we all have in spades. The most powerful insight can come from the simplest command.

I do strongly feel that if we have gone through the rigmarole of joining data (as discussed earlier) then we should be getting the most value from the outputs. And this may require our teams to learn the theory and practice of some new methods.

We are trying to create an environment across our ecda partnership that meets the various preferred learning styles, and we appreciate that some of these methods can take time to master. Our learning also isn’t purely about technical development, as there are many softer analytical skills we also want to finesse. There is no way a group of humans can develop at the same pace as the meteoric evolution that analytics has experienced – but we are finding 2 or 3 things each year (pertinent to our workplan) to focus our learning around. We have built a great support network (we are particularly grateful to the ESRC and UoE) and are confident in our collective ability to deliver actionable insight.

So that’s ‘data’ - itself a catalyst for change.

Look out for ‘people’ and ‘action’, coming soon.